Stepping through the looking glass of privacy-enhancing technologies

April 1, 2024 | By Jonathan Anastasia and Derek Ho

In Lewis Carroll’s novel “Through the Looking-Glass,” Alice steps into an alternate world where things do not work in the usual way. Time runs backwards. Running helps one remain stationary.

The world of privacy-enhancing technologies, or PETs, offers what may seem to be similarly counterintuitive possibilities. Being able to answer a question without knowing what the question is. Gleaning the plot of a book without needing to open it up.

That is the increasingly present reality of PETs, an umbrella term for techniques, methods and processes that can mitigate privacy and security risks in data use and sharing. PETs allow organizations to extract value from data without needing to use or access the raw data itself, preserving confidentiality and consumer privacy by limiting access to sensitive and identifying information. Though not a silver bullet, they are another tool in the toolbox, one that lets organizations technologically ensure, and quantitatively demonstrate, both privacy protection and innovative data use at the same time.

Time to get a PET

While PETs have been around for a while, they have largely been a frontier technology that only a select number of regulators and private-sector companies have had both the interest and the resources to explore. But that has changed in recent years.

The Biden-Harris administration’s executive order on artificial intelligence, issued in October 2023, included an entire section on accelerating the development and use of privacy-preserving technologies, promoting their adoption by federal agencies, and developing guidelines to evaluate their effectiveness.

At a global level, the G7 Data Protection and Privacy Authorities in 2023 endorsed a plan that included promoting the development and use of emerging technologies, including PETs, that can build trust and protect privacy.

Some policymakers and regulators are also probing and pioneering in this space. The U.K. Financial Conduct Authority has set up a Synthetic Data Expert Group, which includes Caroline Louveaux, Mastercard’s chief privacy and data responsibility officer, to explore the use of synthetic data in financial markets. In Singapore, the Infocomm Media Development Authority has an ongoing PETs sandbox for organizations to test practical use cases against regulatory requirements.

At Mastercard, we are also helping to bring these technologies to practical applications that provide a real benefit for countries, companies and individuals. For example, we participated in the Singapore government’s PETs sandbox, where we successfully demonstrated how the use of fully homomorphic encryption, or FHE, facilitates the sharing of financial crime intelligence across borders.

Financial crime is a significant threat to countries’ economies and reputations: the U.N. Office on Drugs and Crime estimates that $800 billion to $2 trillion is laundered globally each year. But the sharing of financial information, especially across borders, is hindered by legal restrictions relating to privacy, cross-border data transfers, banking secrecy and prohibitions against “tipping off” in anti-money-laundering laws. Criminals can hide in the shadows, shielded by the frictions that this inability to share information about suspicious activity inadvertently creates.

More cross-border financial crime intelligence sharing, enabled by FHE, could disrupt criminal network activity and improve detection (for example, by reducing false positives), which could in turn dissuade criminals from targeting financial institutions.

Our use of FHE in the Singapore sandbox allowed us to identify suspicious accounts across multiple data stores while addressing and mitigating legal compliance risks. Information and queries were encrypted to a level considered secure against quantum computing. Tipping off criminals was not a concern, as the queries and the entities in the query-response chain were unknown to the other parties in the data exchange. And encrypted queries containing financial information did not persist for more than a few seconds outside the country of origin. This proof point, among others, suggests that we can have our proverbial privacy and innovation cake and eat it too.

Challenges exist, but they are not insurmountable

All frontier technologies have their share of challenges, and PETs are no different. Processing complexity means that, for now, FHE is better suited to needs requiring a response measured in seconds rather than milliseconds. That said, processing times will improve with continued advances in computational power, and not all use cases require instantaneous or near-real-time processing. Furthermore, as our Singapore case study demonstrated, careful construction of queries makes the process more efficient.

While more regulators are investigating the efficacy of PETs, private-sector participants could benefit from risk-based guidance from privacy and sector regulators describing how PETs could help satisfy the requirements of their respective local laws. Such guidance should give industry players clarity on any specific concerns, which can then be addressed through complementary controls alongside PETs. The importance of this regulatory transparency and encouragement cannot be overstated, given the cost and complexity of implementing any new technology. Efforts like the Singapore sandbox help build an evidence base of practical examples of how the technology can work within a regulatory framework.

Exciting times ahead

While challenges exist, it may be useful to recall the words of Alice’s father in Tim Burton’s “Alice in Wonderland” screen adaptation: “The only way to achieve the impossible is to believe it is possible.”

It is possible to achieve privacy and innovative data use at the same time. But this possibility must be realized through open collaboration between regulators and industry, the regulatory courage to test and adapt the technology and, if necessary, the willingness to adapt the regulatory environment to encourage its use. In an age of increasing distrust about the use and sharing of data, it is in our collective interest to encourage the use of PETs. The tools exist. We just need to step forward together to make them a wider reality.

Jonathan Anastasia is executive vice president for crypto services and financial crime at Mastercard. Derek Ho is assistant general counsel for privacy and data protection at Mastercard.

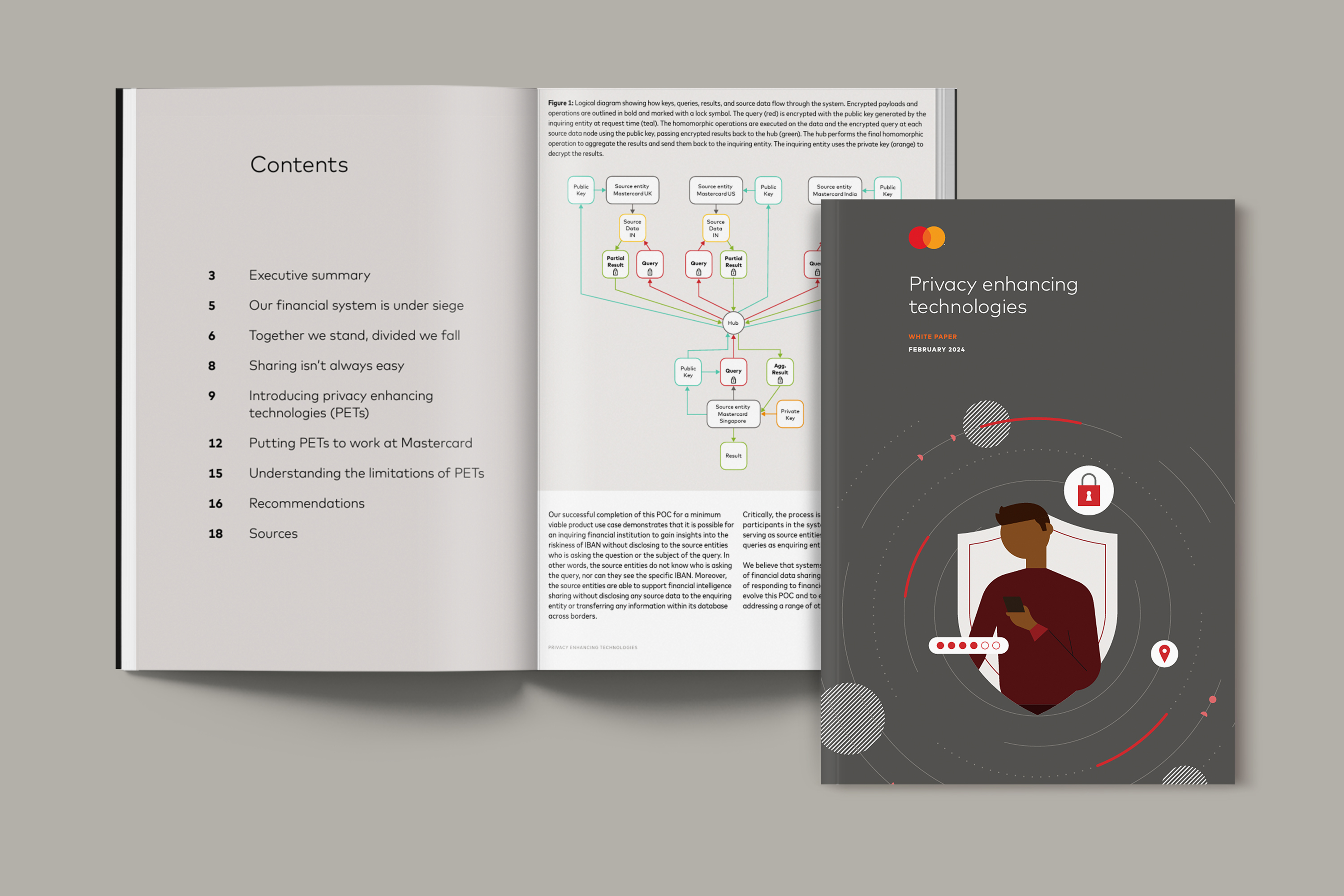

White paper

Exploring privacy-enhancing technologies

New research shows how we can unlock the potential for PETs to be a meaningful tool in our collective fight against the increasing speed, sophistication, and geographic scope of financial crime.
